The AI developer platform

Build models faster, fine-tune LLMs, and develop GenAI applications with confidence, all in one system of record that developers are excited to use.

The era of generative AI

The world’s leading AI teams trust Weights & Biases

A system of record developers want to use

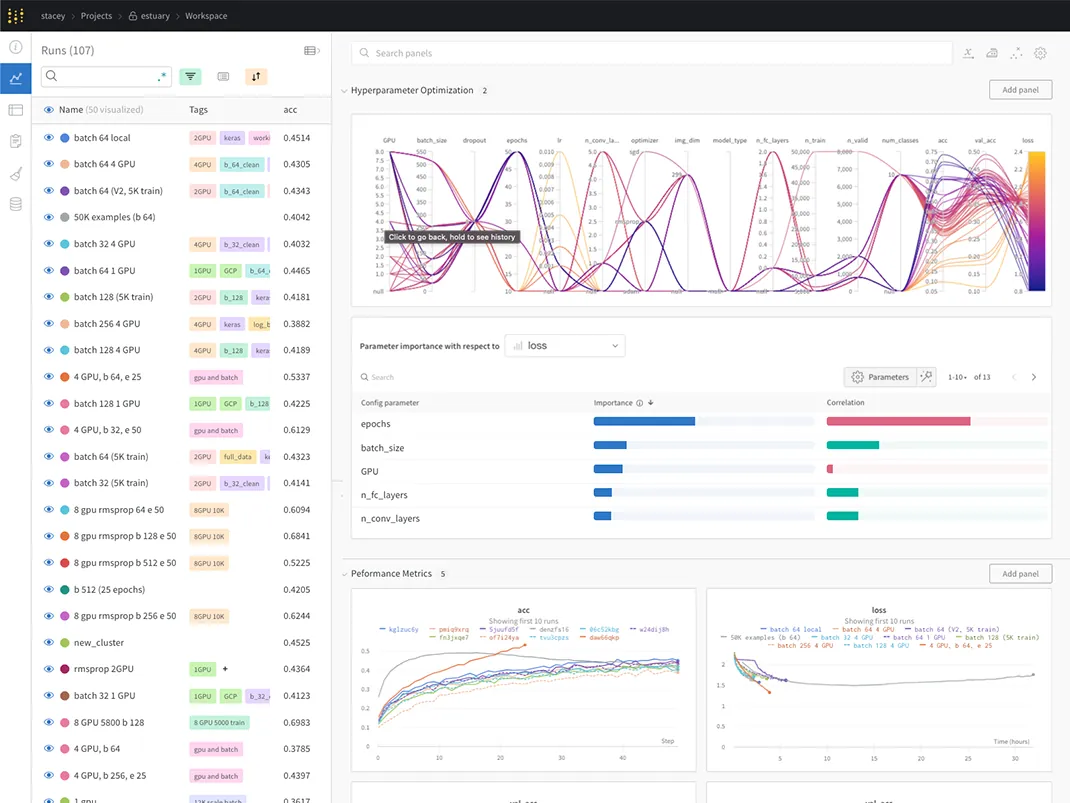

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Launch

Package and run your ML workflow jobs

Model Registry

Register and manage your ML models

Automations

Trigger workflows automatically

Models

Build & fine-tune models

Traces

Monitor and debug LLMs and prompts

Weave

Develop GenAI applications

Evaluations

Rigorous evaluations of GenAI applications
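To make the hyperparameter optimization idea behind Sweeps concrete, here is a minimal sketch of random search in plain Python. It does not use the W&B API: the search space, the `objective` function, and the `random_search` helper are all hypothetical stand-ins for a real training run.

```python
import random

# Hypothetical search space: each hyperparameter maps to its candidate values.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.01, 0.1],
    "dropout": [0.0, 0.1, 0.5],
}


def objective(config):
    """Toy stand-in for a training run; returns a loss to minimize."""
    return (config["learning_rate"] - 0.01) ** 2 + (config["dropout"] - 0.1) ** 2


def random_search(space, trials, seed=0):
    """Sample `trials` random configs and return the best (loss, config) pair."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        loss = objective(config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best


best_loss, best_config = random_search(SEARCH_SPACE, trials=20)
print(best_loss, best_config)
```

A managed sweep would typically add smarter search strategies and distribute trials across machines; the loop above only illustrates the core sample, train, compare cycle.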

Integrate quickly, track & version automatically

- Track, version and visualize with just 5 lines of code

- Reproduce any model checkpoints

- Monitor CPU and GPU usage in real time

“We’re now driving 50 or 100 times more ML experiments versus what we were doing before.”

import wandb

# 1. Start a W&B run

run = wandb.init(project="my_first_project")

# 2. Save model inputs and hyperparameters

config = wandb.config

config.learning_rate = 0.01

# 3. Log metrics to visualize performance over time

for i in range(10):

    loss = 1.0 / (i + 1)  # placeholder value; replace with your model's actual loss

    run.log({"loss": loss})

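import os

import wandb

# Import paths assume the legacy LangChain API used in this snippet

from langchain.agents import AgentType, initialize_agent, load_tools

from langchain.llms import OpenAI

# 1. Set environment variables for the W&B project and tracing.

os.environ["LANGCHAIN_WANDB_TRACING"] = "true"

os.environ["WANDB_PROJECT"] = "langchain-tracing"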

# 2. Load llms, tools, and agents/chains

llm = OpenAI(temperature=0)

tools = load_tools(["llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

# 3. Serve the chain/agent with all underlying complex llm interactions automatically traced and tracked

agent.run("What is 2 raised to .123243 power?")

import wandb

from llama_index import ServiceContext

from llama_index.callbacks import CallbackManager, WandbCallbackHandler

# initialise WandbCallbackHandler and pass any wandb.init args

wandb_args = {"project":"llamaindex"}

wandb_callback = WandbCallbackHandler(run_args=wandb_args)

# pass wandb_callback to the service context

callback_manager = CallbackManager([wandb_callback])

service_context = ServiceContext.from_defaults(callback_manager=callback_manager)

import wandb

# 1. Start a new run

run = wandb.init(project="gpt5")

# 2. Save model inputs and hyperparameters

config = run.config

config.dropout = 0.01

# 3. Log gradients and model parameters

run.watch(model)

for batch_idx, (data, target) in enumerate(train_loader):

    ...

    if batch_idx % args.log_interval == 0:

        # 4. Log metrics to visualize performance

        run.log({"loss": loss})

import wandb

from transformers import Trainer, TrainingArguments

# 1. Define which wandb project to log to and name your run

run = wandb.init(project="gpt-5", name="gpt-5-base-high-lr")

# 2. Add wandb in your `TrainingArguments`

args = TrainingArguments(..., report_to="wandb")

# 3. W&B logging will begin automatically when you start training your Trainer

trainer = Trainer(..., args=args)

trainer.train()

from lightning.pytorch import Trainer

from lightning.pytorch.loggers import WandbLogger

# initialise the logger

wandb_logger = WandbLogger(project="llama-4-fine-tune")

# add configs such as batch size etc to the wandb config

wandb_logger.experiment.config["batch_size"] = batch_size

# pass wandb_logger to the Trainer

trainer = Trainer(..., logger=wandb_logger)

# train the model

trainer.fit(...)

import tensorflow as tf

import wandb

# 1. Start a new run

run = wandb.init(project="gpt4")

# 2. Save model inputs and hyperparameters

config = wandb.config

config.learning_rate = 0.01

# Model training here

# 3. Log metrics to visualize performance over time

with tf.Session() as sess:  # TensorFlow 1.x session API

    # ...

    wandb.tensorflow.log(tf.summary.merge_all())

import wandb

from wandb.keras import WandbMetricsLogger, WandbModelCheckpoint

# 1. Start a new run

run = wandb.init(project="gpt-4")

# 2. Save model inputs and hyperparameters

config = wandb.config

config.learning_rate = 0.01

... # Define a model

# 3. Log layer dimensions and metrics

wandb_callbacks = [WandbMetricsLogger(log_freq=5), WandbModelCheckpoint("models")]

model.fit(X_train, y_train, validation_data=(X_test, y_test), callbacks=wandb_callbacks)

import wandb

wandb.init(project="visualize-sklearn")

# Model training here

# Log classifier visualizations

wandb.sklearn.plot_classifier(clf, X_train, X_test, y_train, y_test, y_pred, y_probas, labels, model_name="SVC", feature_names=None)

# Log regression visualizations

wandb.sklearn.plot_regressor(reg, X_train, X_test, y_train, y_test, model_name="Ridge")

# Log clustering visualizations

wandb.sklearn.plot_clusterer(kmeans, X_train, cluster_labels, labels=None, model_name="KMeans")

import wandb

import xgboost

from wandb.xgboost import wandb_callback

# 1. Start a new run

run = wandb.init(project="visualize-models")

# 2. Add the callback

bst = xgboost.train(param, xg_train, num_round, watchlist, callbacks=[wandb_callback()])

# Get predictions

pred = bst.predict(xg_test)

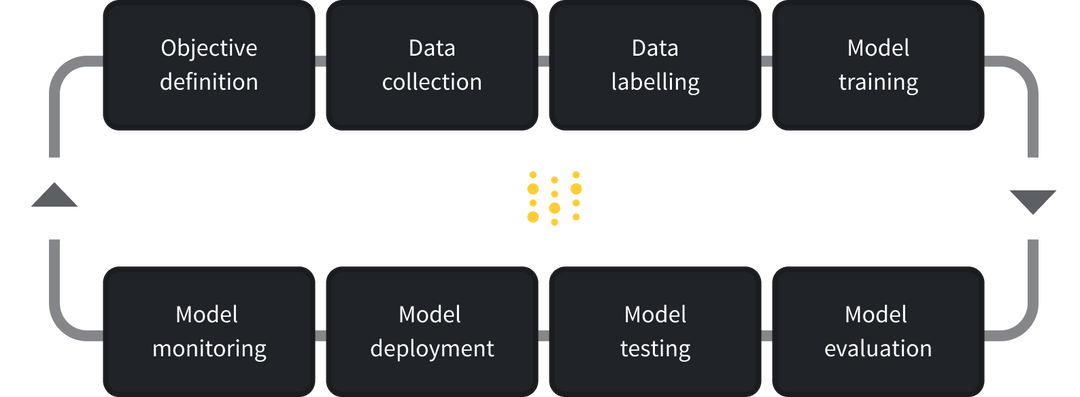

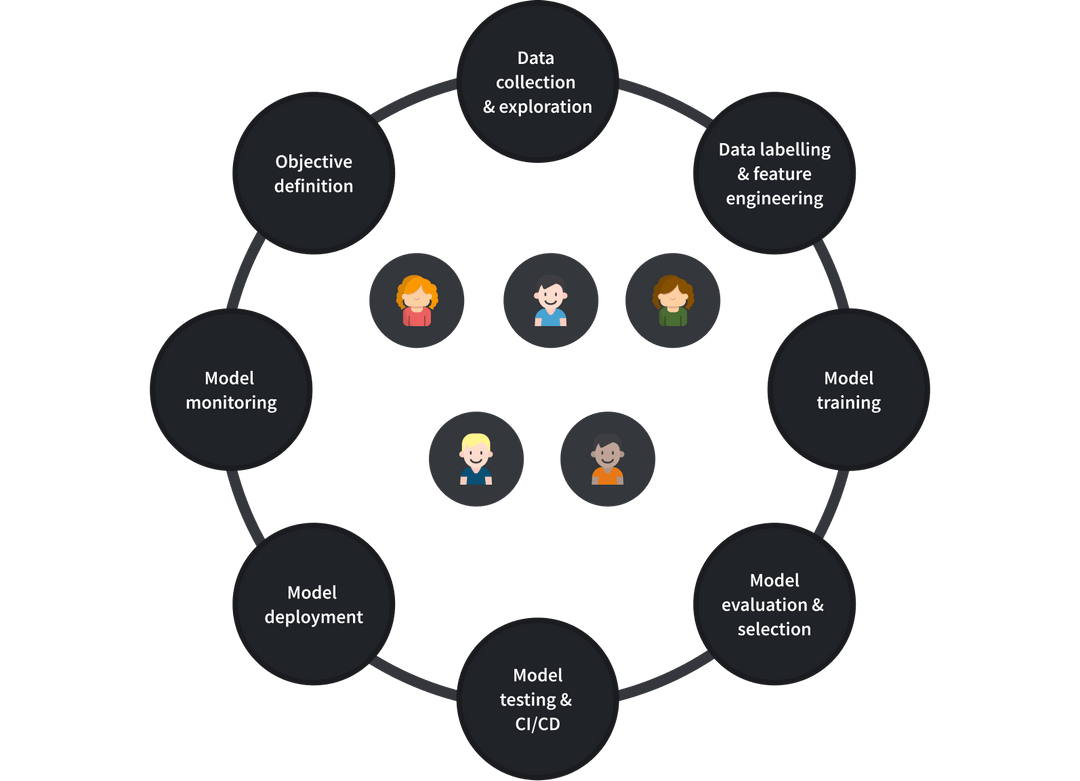

The leading AI developer platform that provides value to your entire team

The user experience that makes redundant work disappear

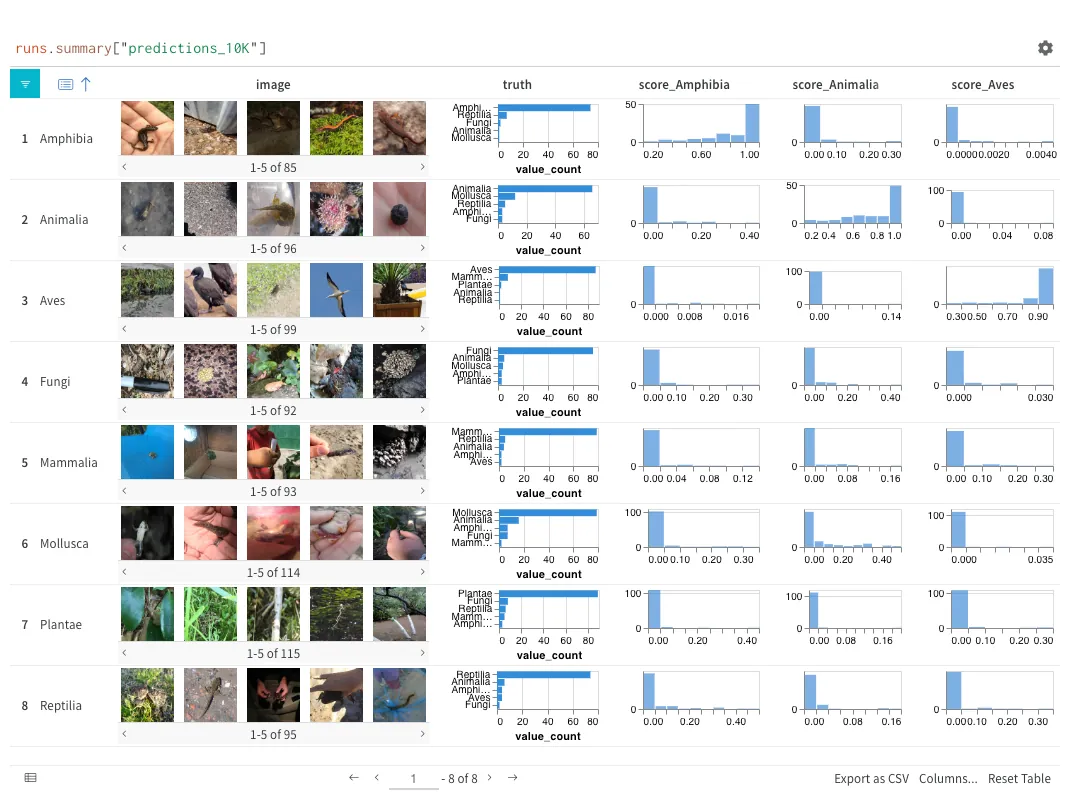

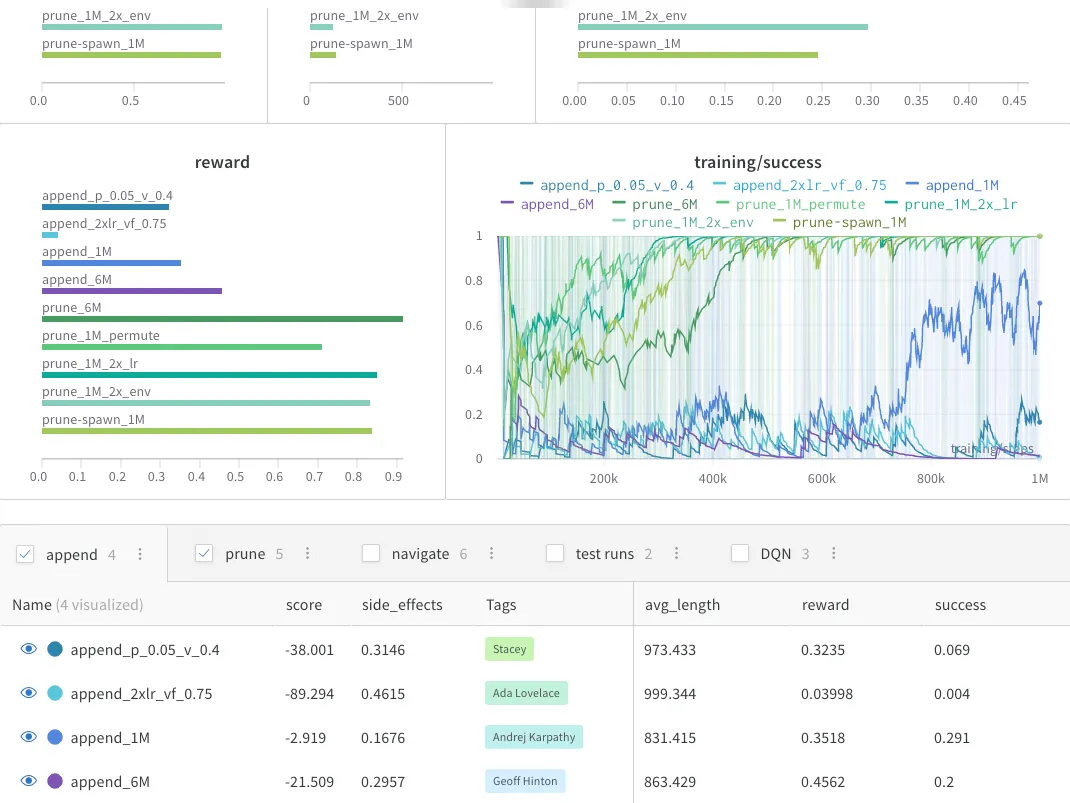

Track every detail of your ML pipeline automatically. Visualize results with relevant context. Use drag-and-drop analysis to uncover insights: your next best model is just a few clicks away.

The ML workflow co-designed with ML engineers

Build streamlined ML workflows incrementally. Configure and customize every step. Leverage intelligent defaults so you don’t have to reinvent the wheel.

A system of record that makes all histories reproducible and discoverable

Reproduce any experiment instantly. Track model evolution with changes explained along the way. Easily discover and build on top of your team’s work.
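One ingredient of reproducibility is being able to tell whether two runs used the same settings. The following is a minimal sketch of that idea in plain Python, not W&B's implementation: the `config_fingerprint` helper and the example configs are hypothetical.

```python
import hashlib
import json


def config_fingerprint(config):
    """Return a short, stable hash of a config dict, independent of key order."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


run_a = {"learning_rate": 0.01, "dropout": 0.1, "seed": 42}
run_b = {"seed": 42, "dropout": 0.1, "learning_rate": 0.01}  # same settings, different key order
run_c = {"learning_rate": 0.02, "dropout": 0.1, "seed": 42}  # different learning rate

print(config_fingerprint(run_a) == config_fingerprint(run_b))
print(config_fingerprint(run_a) == config_fingerprint(run_c))
```

Serializing with sorted keys before hashing means the fingerprint depends only on the settings themselves, so runs with identical hyperparameters can be grouped regardless of how the config was assembled.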

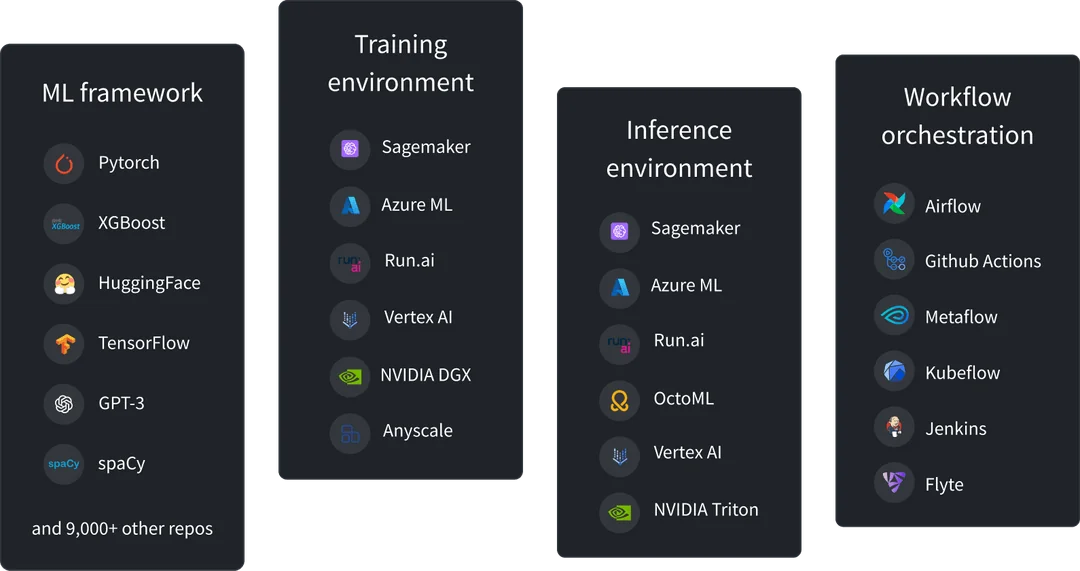

Flexible deployments, easy integration

Deploy W&B to your infrastructure of choice, with W&B-managed and self-managed options available. Integrate with your ML stack and tools without vendor lock-in.

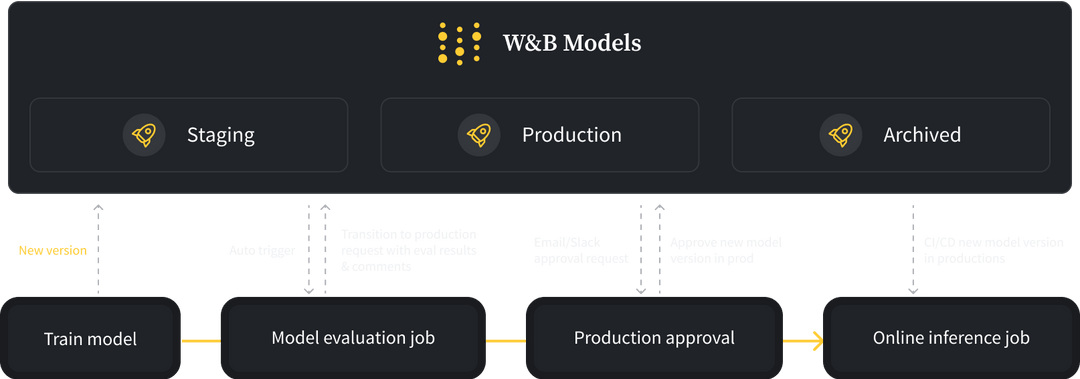

Bridge ML Practitioners and MLOps

Automate and scale ML workloads in one collaborative interface – ML practitioners get the simplicity, MLOps get the visibility.

Scale ML production with governance

Any industry, any use case

Customers from diverse industries trust W&B with a variety of ML use cases. From autonomous vehicles to drug discovery, and from customer support automation to generative AI, W&B’s flexible workflow handles all your custom needs.

Let the team focus on value-added activities

Focus only on core ML activities. W&B automatically takes care of the boring tasks for you: reproducibility, auditability, infrastructure management, and security & governance.

Future-proof your ML workflow – W&B co-designs with OpenAI and other innovators to encode their secret sauce so you don’t need to reinvent the wheel.

Designed to help software developers deploy GenAI applications with confidence

The tools developers need to evaluate, understand and iterate on dynamic, non-deterministic large language models.

Automatically log all inputs, outputs and traces for simple debugging

Weave captures all input and output data and builds a tree to give developers full observability into, and understanding of, how data flows through their applications.

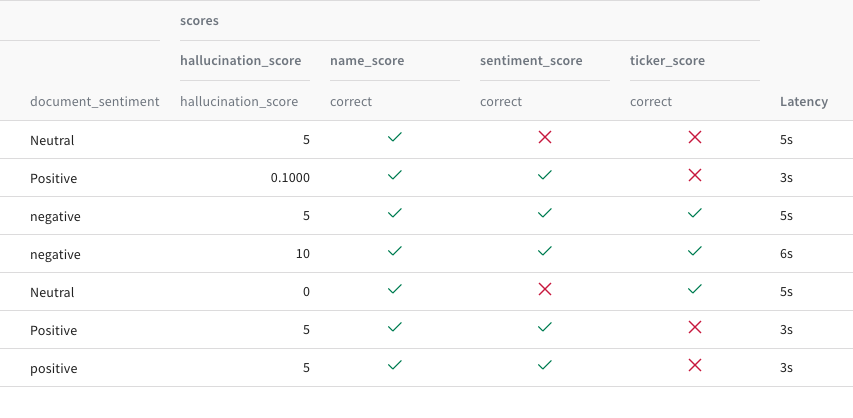

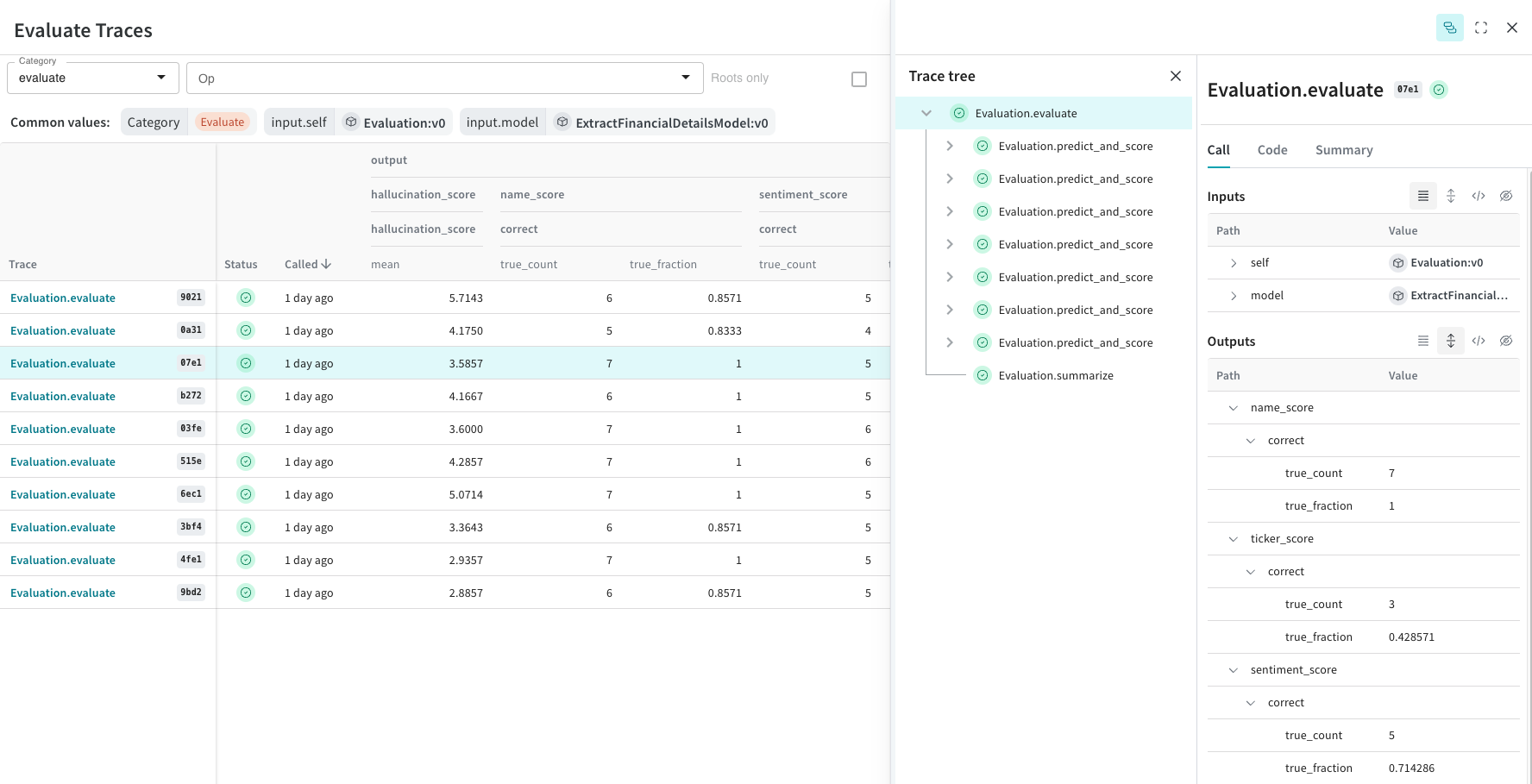

Rigorous evaluation frameworks to deliver robust LLM performance

Compare different evaluations of model results against different dimensions of performance to ensure applications are as robust as possible when deploying to production.
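As a rough illustration of comparing applications across several dimensions of performance, the sketch below scores two toy "models" with two scorers over a small dataset. Everything here is hypothetical (the dataset, `model_a`, `model_b`, the scorers, and `evaluate`), and it does not use the Weave evaluation API.

```python
# Hypothetical evaluation set: (input, expected output) pairs.
DATASET = [("2 + 2", "4"), ("capital of France", "Paris"), ("3 * 3", "9")]


def model_a(prompt):
    """Toy stand-in for an LLM application that answers tersely but makes one mistake."""
    return {"2 + 2": "4", "capital of France": "Paris", "3 * 3": "6"}[prompt]


def model_b(prompt):
    """Toy stand-in that is always correct but sometimes verbose."""
    return {"2 + 2": "The answer is 4", "capital of France": "Paris", "3 * 3": "9"}[prompt]


def exact_match(output, expected):
    """1.0 if the output equals the expected answer exactly, else 0.0."""
    return float(output == expected)


def contains_answer(output, expected):
    """1.0 if the expected answer appears anywhere in the output, else 0.0."""
    return float(expected in output)


SCORERS = {"exact_match": exact_match, "contains_answer": contains_answer}


def evaluate(model):
    """Average each scorer over the dataset for one model."""
    return {
        name: sum(scorer(model(x), y) for x, y in DATASET) / len(DATASET)
        for name, scorer in SCORERS.items()
    }


for label, model in [("model_a", model_a), ("model_b", model_b)]:
    print(label, evaluate(model))
```

The two models tie on exact match but differ on the looser scorer, which is the kind of trade-off that only shows up when results are compared along more than one dimension.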